Introduction

We’re living in the Digital Age, where artificial intelligence (AI) is one of the media’s favourite buzzwords and our inboxes are flooded daily with promotional material about the latest AI-driven tools that promise to make our lives and jobs easier. As the development and deployment of AI rapidly accelerates, spreading through industries and collecting data faster than we can keep up with, policymakers around the world are scrambling to come up with guidelines and laws that appropriately and effectively address the risks presented by AI.1

Just last month, the Australian Federal Government released the Voluntary AI Safety Standard, consisting of 10 voluntary guardrails that apply to all organisations throughout the AI supply chain,2 together with a proposal for introducing mandatory guardrails for AI in high-risk settings.3 This week, the Office of the Australian Information Commissioner released privacy guidance for organisations and government agencies using commercially available AI products.4

Over in the European Union, the Artificial Intelligence Act (Regulation (EU) 2024/1689) (EU AI Act) is the world’s first comprehensive legal framework for regulating the development and ethical use of AI. Applying a risk-based approach to AI systems, it sets out mandatory requirements for organisations that develop and deploy AI in the EU, backed by significant penalties for non-compliance. These requirements will be phased in between February 2025 and August 2027.

For Australian organisations that are using (or considering using) AI, and have (or may eventually have) clients in the EU, there is no better time than the present to understand your obligations under the EU AI Act. This article provides a brief overview of the EU AI Act’s application, risk categories, timeline and penalties, rounding out with practical considerations for minimising AI-related risk exposure and maintaining compliance.

The EU’s new AI Act

Who does it apply to?

The EU AI Act has extraterritorial reach. It applies to individuals and organisations, both within and outside of the European Union, who5:

- develop an AI system or general purpose AI (GPAI) model, or have an AI system or GPAI model developed, and market it under their own name or trademark (Provider);

- use an AI system, except where such use is in a personal, non-professional capacity (Deployer);

- otherwise import or distribute an AI system or GPAI model within the EU (Importer and Distributor).

Australian individuals and organisations will fall under the ambit of the EU AI Act if they develop or use an AI system or GPAI model in a professional capacity (i.e. not for personal use), and individuals or organisations in the EU can interact with that AI system or GPAI model.

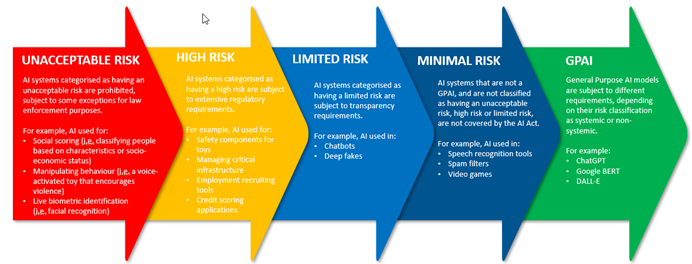

AI systems categorised by risk

Under the EU AI Act, AI systems are classified according to four risk categories – Unacceptable, High, Limited and Minimal. The greater the risk posed by the AI system, the stricter the regulatory requirements on the Provider, Deployer, Importer or Distributor will be.

| Risk category | Description |
| --- | --- |
| Unacceptable or Prohibited Risk | AI systems that are considered a threat to people are categorised as having an ‘unacceptable’ risk and are therefore prohibited. This includes AI systems that manipulate decision-making or exploit vulnerabilities (for example, a voice-activated toy that encourages children to engage in dangerous behaviour), or that are designed for social scoring or for biometric identification and categorisation (for example, evaluating or classifying people based on social behaviour or personal characteristics to predict a person’s risk of committing a crime), subject to exceptions for law enforcement purposes.6 The untargeted scraping of facial images from social media platforms is also prohibited. This practice was recently discussed before Australia’s Select Committee on Adopting Artificial Intelligence, after Meta admitted to scraping Australian users’ public Facebook photos and posts with no opt-out option.7 In a construction setting, a prohibited use might include the non-consensual biometric identification and categorisation of construction workers using CCTV footage of a construction site. |
| High Risk | AI systems are categorised as having a ‘high’ risk if they pose a significant risk to individuals’ health, safety or fundamental rights.8 This includes AI systems that are a product, or form part of a safety component of a product, covered by the EU’s product safety legislation (such as cars, lifts and medical devices). It also includes AI systems used in specific areas, such as the management and operation of critical infrastructure, law enforcement, or migration, asylum and border control, which are required to be registered in a database.9 High-risk AI systems are subject to strict risk assessment, record-keeping and transparency requirements.10 |
| Limited Risk | AI systems categorised as having a ‘limited’ risk, such as chatbots and deepfakes, are subject to transparency obligations centred on user awareness.11 For example, if an accounting business uses an AI-powered, automated customer service tool to answer tax-related questions on its website, the use of AI must be disclosed to users. |
| Minimal Risk | AI systems categorised as having a ‘minimal’ risk, such as those used in spam filters, for generating music or TV show recommendations, or as virtual assistants for non-critical tasks, are not subject to any regulatory requirements under the EU AI Act.12 An example is a government department using AI to assist with the administrative task of scheduling appointments for permit applications and licence renewals. |
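As an illustration only, an organisation could record the four categories above in an internal register of its AI systems. The sketch below assumes a human reviewer has already assigned each category. The names `AISystem`, `HEADLINE_REQUIREMENTS` and `action_list`, and the example systems, are hypothetical. The listed requirements simply paraphrase the descriptions above and are not a substitute for legal advice.

```python
from dataclasses import dataclass
from enum import Enum


class RiskCategory(Enum):
    # Defined from strictest to least strict, so definition order can be used for sorting.
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Headline consequences per category, paraphrased from the descriptions above.
HEADLINE_REQUIREMENTS = {
    RiskCategory.UNACCEPTABLE: ("prohibited: do not deploy",),
    RiskCategory.HIGH: ("risk assessment", "record-keeping", "transparency"),
    RiskCategory.LIMITED: ("disclose AI use to users",),
    RiskCategory.MINIMAL: (),
}


@dataclass(frozen=True)
class AISystem:
    name: str               # hypothetical internal label
    category: RiskCategory  # assigned by a human reviewer, not computed here


def action_list(register):
    """Return {system name: headline requirements}, strictest categories first."""
    order = list(RiskCategory)  # Enum iteration preserves definition order
    ranked = sorted(register, key=lambda system: order.index(system.category))
    return {system.name: HEADLINE_REQUIREMENTS[system.category] for system in ranked}


register = [
    AISystem("appointment scheduler", RiskCategory.MINIMAL),
    AISystem("tax question chatbot", RiskCategory.LIMITED),
    AISystem("site CCTV biometric identification", RiskCategory.UNACCEPTABLE),
]

for name, requirements in action_list(register).items():
    print(name, "->", ", ".join(requirements) or "no requirements under the EU AI Act")
```

Sorting by category puts any prohibited or high-risk system at the top of the list, which is where review effort is most likely to be needed.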

For GPAI models, such as ChatGPT, Google BERT and DALL-E, which have a broad range of applications and can perform a wide variety of tasks, Providers’ obligations depend on whether the model poses a ‘systemic’ or ‘non-systemic’ risk.13 A GPAI model poses a systemic risk if it has ‘high impact capabilities’ (i.e. potential for large-scale or cross-sector harm), either as evaluated using appropriate technical tools and methodologies or as decided by the Commission, ex officio or following a qualified alert from the scientific panel.14 Regardless of the classification, all GPAI models are subject to transparency-focused obligations in relation to technical documentation, information about the content used for training purposes and compliance with copyright laws.15

Staggered timeline

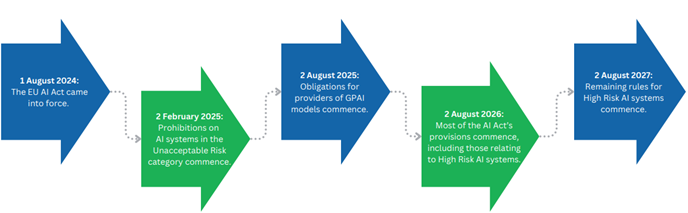

While the EU AI Act came into force on 1 August 2024, none of the requirements apply just yet. There is a staggered implementation timeline from February 2025 through to August 2027.16

| Date | What starts to apply |
| --- | --- |
| 2 February 2025 | Prohibitions on AI systems with an ‘unacceptable’ risk and introductory provisions start to apply. |
| 2 August 2025 | Obligations for providers of GPAI models, as well as certain other provisions relating to notified bodies, governance of the EU AI Act, confidentiality of information obtained by the Commission and others for the purposes of carrying out the EU AI Act, and penalties, start to apply. |
| 2 August 2026 | The remainder of the EU AI Act starts to apply, except Article 6(1). |
| 2 August 2027 | Article 6(1), the final requirement for high risk AI systems, starts to apply. For AI systems that form part of a safety component of a product, or are a product, that is covered by the EU’s product safety legislation, such as cars, lifts and medical devices, this includes the requirement for a third-party conformity assessment prior to marketing or using the product. |
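For organisations tracking these dates in a compliance calendar, the staggered timeline can be expressed as a simple lookup. The following is a minimal sketch, assuming only the four dates listed above. The names `MILESTONES` and `provisions_in_effect` are illustrative, and the labels are shortened summaries rather than the wording of the Act.

```python
from datetime import date

# (start date, shortened summary) pairs taken from the timeline above.
MILESTONES = [
    (date(2025, 2, 2), "Prohibitions on 'unacceptable' risk AI systems; introductory provisions"),
    (date(2025, 8, 2), "GPAI provider obligations; notified bodies, governance, confidentiality, penalties"),
    (date(2026, 8, 2), "Remainder of the EU AI Act, except Article 6(1)"),
    (date(2027, 8, 2), "Article 6(1) for high-risk AI systems"),
]


def provisions_in_effect(on: date) -> list[str]:
    """Return the summaries of every milestone that has started on or before `on`."""
    return [summary for start, summary in MILESTONES if on >= start]


# Example: check which groups of provisions apply on a planned EU launch date.
for summary in provisions_in_effect(date(2026, 1, 15)):
    print(summary)
```

A date before 2 February 2025 returns an empty list, reflecting that none of the requirements apply until the first milestone.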

Penalties for non-compliance

There are significant penalties for non-compliance with the EU AI Act, depending on the risk category of the AI system and the severity of the violation.

| Type of breach | Maximum fine |
| --- | --- |
| Prohibited AI Practices / Unacceptable Risk | Individuals or entities who engage in prohibited AI practices may be subject to fines of up to €35,000,000 (approx. $57,000,000) or 7% of total worldwide annual turnover for the preceding financial year, whichever is higher (or whichever is lower for SMEs).17 |
| Provisions Concerning Operators or Notified Bodies | Individuals, entities or notified bodies who fail to comply with certain provisions, including transparency obligations for limited risk AI systems, may be subject to fines of up to €15,000,000 (approx. $24,100,000) or 3% of total worldwide annual turnover for the preceding financial year, whichever is higher (or whichever is lower for SMEs).18 |
| Supply of Incorrect, Incomplete or Misleading Information | Individuals or entities who supply “incorrect, incomplete or misleading information to notified bodies or national competent authorities in reply to a request” may be subject to fines of up to €7,500,000 or 1% of total worldwide annual turnover for the preceding financial year, whichever is higher (or whichever is lower for SMEs).19 |
| GPAI Providers | Providers of GPAI models who intentionally or negligently breach the EU AI Act may be subject to fines of up to €15,000,000 (approx. $24,100,000) or 3% of total worldwide annual turnover for the preceding financial year, whichever is higher.20 |
| EU Institutions, Bodies, Offices and Agencies | EU institutions, bodies, offices and agencies who fail to comply with provisions of the EU AI Act may be subject to fines of up to €1,500,000 (approx. $2,400,000) for breaches of prohibited AI practices, or up to €750,000 (approx. $1,200,000) for breaches of other requirements or obligations.21 |
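The “whichever is higher (or whichever is lower for SMEs)” rule is easier to follow with a worked calculation. The sketch below is illustrative only. It uses the euro amounts and percentages quoted above, and the names `FineTier`, `FINE_TIERS` and `fine_ceiling_eur` are assumptions made for this example. It calculates the upper limit of a fine, not the fine a regulator would actually impose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    fixed_eur: int           # fixed amount in euros
    turnover_pct: int        # percentage of total annual turnover
    sme_lower_applies: bool  # True where the "whichever is lower for SMEs" rule is stated


# Figures as quoted in the penalties summary above.
FINE_TIERS = {
    "prohibited_practices": FineTier(35_000_000, 7, True),
    "operator_or_notified_body": FineTier(15_000_000, 3, True),
    "misleading_information": FineTier(7_500_000, 1, True),
    "gpai_provider": FineTier(15_000_000, 3, False),
}


def fine_ceiling_eur(tier_name: str, annual_turnover_eur: float, is_sme: bool = False) -> float:
    """Return the maximum fine: the higher of the two amounts, or the lower for SMEs."""
    tier = FINE_TIERS[tier_name]
    turnover_based = annual_turnover_eur * tier.turnover_pct / 100
    if is_sme and tier.sme_lower_applies:
        return min(tier.fixed_eur, turnover_based)
    return max(tier.fixed_eur, turnover_based)


# A large enterprise with EUR 1 billion turnover: 7% of turnover exceeds EUR 35 million.
print(fine_ceiling_eur("prohibited_practices", 1_000_000_000))
# An SME with EUR 10 million turnover: the lower of the two amounts applies.
print(fine_ceiling_eur("prohibited_practices", 10_000_000, is_sme=True))
```

Under these assumptions, the large enterprise faces a ceiling of €70,000,000, while the SME faces a ceiling of €700,000 for the same category of breach.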

Practical considerations for Australian businesses

Now that we’ve covered the key aspects of the EU AI Act – what it is, who it applies to, the risk categories for AI systems and the potential penalties for non-compliance – it’s time to think about what this all means, in a practical sense, for Australian organisations to which the Act applies.

Key AI-related risks

AI has the potential to significantly enhance an organisation’s capabilities, but with amplified opportunity comes amplified risk. There are three key risks to be aware of when using AI within your organisation:

| Risk | Description |
| --- | --- |
| Operational Risk | Where an AI system is inadequately trained, tested or supervised, or fails to perform as intended, this may result in a disruption to daily operations, inefficient business processes and/or financial loss. For example, if an AI system used for inventory management generated inaccurate predictions about stock levels or sales forecasts, this could lead to supply chain issues, dissatisfied customers and a loss of revenue. Managing operational risks requires robust oversight and continuous monitoring of AI systems to ensure that they align with business objectives and function reliably. |
| Reputational Risk | Where an organisation uses an AI system, or acts in a certain way as a result of using AI, that is perceived as unethical or harmful, this may cause damage to public perception. An example is the use of a biased AI system that leads to unfair hiring practices. Trust is critical to an organisation’s success, and it is therefore important that organisations proactively manage reputational risks arising from the use of AI by ensuring transparency, inclusivity, fairness and accountability in AI-assisted business processes. |
| Legal & Compliance Risk | Organisations need to be aware of, and adhere to, local and international regulations governing AI use. An obvious example is the EU AI Act, but there are several legal frameworks that operate in parallel with the use of technology and AI, such as cybersecurity, data privacy, discrimination or intellectual property laws. Non-compliance may result in significant penalties or legal action. Organisations should stay informed about evolving regulations, and seek legal advice on risk exposure and mitigation strategies, to ensure AI systems comply with regulatory standards. |

Practical steps to minimise and manage risk exposure

While the risks associated with AI – operational, reputational, legal or otherwise – are significant and should not be underestimated, they are by no means insurmountable. The key is to formulate a balanced approach to AI that is informed by the organisation’s data governance framework and can be understood and implemented by the Board and the broader team:

- Maintain a robust data governance framework: AI systems rely heavily on data, which means that implementing, verifying and maintaining a robust data governance framework is critical to managing an organisation’s AI-related risks. From collection, access and use, through to storage, security and destruction, organisations should have clear policies and procedures in place to ensure that data is handled responsibly.

- Formulate an AI strategy: Formulating an AI strategy enables organisations to adopt AI in a controlled and purposeful way, avoiding exposure to unnecessary risks or compliance issues. A well-defined AI strategy starts with an understanding of where AI can add value to an organisation, and the potential risks specific to an organisation’s industry and operations. It should outline the organisation’s approach to implementing, assessing and monitoring the use of AI, as well as key responsibilities for overseeing AI-related tasks. The Board is required to maintain active oversight of AI in the same way it does for cybersecurity and finance.

- Build an appropriately skilled team: To effectively use, and provide oversight of, AI in the workplace, employees should be sufficiently trained to understand how the technology works, how to interact with it, how to interpret an AI system’s output, and how to monitor for potential issues. By investing in employee education and training, organisations can create a future-focused workforce capable of providing the necessary oversight of AI-driven processes, maximising AI’s value while reducing the likelihood of operational, reputational or legal missteps.

Key Takeaways

- The introduction of the European Union’s AI Act presents a timely opportunity for Australian organisations trading within the EU to ensure that they understand, and are complying with, the mandatory requirements for developing and using AI systems.

- The EU AI Act classifies AI systems according to four risk categories – Unacceptable, High, Limited and Minimal. The greater the risk posed by the AI system, the stricter the regulatory requirements will be. There are significant penalties for non-compliance.

- AI can significantly enhance your organisation’s capabilities, but with amplified opportunity comes amplified risk. Organisations should be aware of key AI-related risks in relation to business operations, reputation and legal / compliance.

- Organisations can minimise and manage risk exposure by maintaining a robust data governance framework, formulating an AI strategy and building an appropriately skilled team.

Footnotes

- In late 2020, the Attorney-General’s Department commenced a comprehensive review of the Privacy Act 1988 (Cth), which involved significant public consultation. The Law Council of Australia, with the assistance of the Law Society of New South Wales, Queensland Law Society and the Law Institute of Victoria, provided a submission to the Attorney-General’s Department on 10 April 2024: https://lawcouncil.au/resources/submissions/doxxing-and-privacy-reforms.

- Australian Government, Dept of Industry, Science and Resources, Voluntary AI Safety Standard (5 September 2024) https://www.industry.gov.au/publications/voluntary-ai-safety-standard#about-the-standard-1.

- Australian Government, Dept of Industry, Science and Resources, Introducing mandatory guardrails for AI in high-risk settings: proposal paper (5 September 2024): https://consult.industry.gov.au/ai-mandatory-guardrails.

- Office of the Australian Information Commissioner, Guidance on privacy and the use of commercially available AI products (21 October 2024): https://www.oaic.gov.au/privacy/privacy-guidance-for-organisations-and-government-agencies/guidance-on-privacy-and-the-use-of-commercially-available-ai-products.

- AI Act, Article 2 (Scope) and 3 (Definitions).

- AI Act, Chapter II (Prohibited AI Practices).

- ABC News, Facebook admits to scraping every Australian adult user’s public photos and posts to train AI, with no opt-out option (11 September 2024): https://www.abc.net.au/news/2024-09-11/facebook-scraping-photos-data-no-opt-out/104336170.

- AI Act, Chapter III (High-Risk AI Systems), Annex I (List of Union Harmonisation Legislation) and Annex III (High-Risk AI Systems Referred to in Article 6(2)).

- AI Act, Article 6 (Classification of AI systems as High-Risk); European Parliament, EU AI Act: first regulation on artificial intelligence (published 8 June 2023, last updated 18 June 2024).

- European Commission, AI Act (undated): https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

- AI Act, Chapter IV (Transparency Obligations for Providers and Deployers of Certain AI Systems); European Commission, AI Act (undated): https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

- EU Artificial Intelligence Act, High-level summary of the AI Act (27 February 2024): https://artificialintelligenceact.eu/high-level-summary/

- AI Act, Chapter V (General-Purpose AI Models).

- AI Act, Article 51 (Classification of General-Purpose AI Models as General-Purpose AI Models with Systemic Risk); European Parliament, EU AI Act: first regulation on artificial intelligence (published 8 June 2023, last updated 18 June 2024).

- AI Act, Article 53 (Obligations for Providers of General-Purpose AI Models).

- EU AI Act, Article 113 (Entry into Force and Application); EU Artificial Intelligence Act, Implementation Timeline (last updated 1 August 2024): https://artificialintelligenceact.eu/implementation-timeline/

- EU AI Act, Article 99(3), (6) (Penalties)

- EU AI Act, Article 99(4), (6) (Penalties). The relevant provisions are Articles 16, 22, 23, 24, 26, 31, 33, 34 and 50.

- EU AI Act, Article 99(5), (6) (Penalties).

- EU AI Act, Article 101 (Fines for Providers of General-Purpose AI Models).

- EU AI Act, Article 100 (Administrative Fines on Union Institutions, Bodies, Offices and Agencies).